From November 28 to December 10, 2025, the exhibition glow in/complete will be held at Shinjuku Ganka Gallery—Space Underground. Following glow - in progress (2023) and glow - in practice (2024), glow members have continued their exploration of the space between thought and practice through media expression.

This exhibition presents new practices emerging from interactions with others and the environment, building upon this accumulated work. in/complete signifies an attitude of listening to the ongoing process of creation, without defining an endpoint. The exhibition opens as a space where inquiry and creation gently intersect.

*Closed Thursday, December 4

*Opens at 4:00 PM on Friday, November 28

*Closes at 5:00 PM on Wednesday, December 3 and Wednesday, December 10

5-18-11 Shinjuku, Shinjuku-ku, Tokyo

Curated + Supervised by: glow

Production & PR Design: Akiko KYONO

Web Coding: Shoko ITO

Key Visual Production: Ryuichi MARUO (+ Toki MARUO)

Overall Direction: Kyo AKABANE

Cooperation: Institute of Advanced Media Arts and Sciences [IAMAS], Aichi University of the Arts, Marble Corp., asyl Co., Ltd., FLAME Co., Ltd.

Radio no longer reaches our ears as airwaves vibrating through the air, but as data drifting through networks. Streamed audio is endlessly replicated and disseminated, its origin and source remaining ambiguous. Yet within its resonance, a “cultural accent” breathes alongside the voices of distant places.

This work transcribes radio and podcast audio in real time, feeding the resulting text into an image-generating AI at a rate of 20 frames per second. At each moment, language transforms into image, and the granularity of information manifests as the texture of the visuals. Furthermore, the vast array of generated images and their corresponding text data are sequentially archived, allowing the “trajectory of transformation” to be traced later. Following this chain of transcription, one encounters the biases and noise that lurk within the flow as meaning reshapes itself.
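As a rough illustration of the chain described above, the sketch below pairs each frame of a 20 fps stream with the most recent transcript text and serializes the pairs so the sequence can be traced later. It is a minimal sketch, not the artists' implementation: the transcription and image-generation steps are left out entirely, and the names `prompts_per_frame`, `to_jsonl`, and the record fields are hypothetical.

```python
import json
from bisect import bisect_right
from typing import Dict, List, Tuple

FPS = 20  # frame rate stated in the text


def prompts_per_frame(
    segments: List[Tuple[float, str]], duration: float, fps: int = FPS
) -> List[Dict]:
    """Assign to every frame the latest transcript text available at its time.

    `segments` is a hypothetical list of (start_time_seconds, text) pairs,
    sorted by start time, standing in for the output of a live transcriber.
    """
    starts = [start for start, _ in segments]
    records = []
    for frame in range(round(duration * fps)):
        t = frame / fps
        # Index of the last segment that has started at or before time t.
        idx = bisect_right(starts, t) - 1
        prompt = segments[idx][1] if idx >= 0 else ""
        records.append({"frame": frame, "time": t, "prompt": prompt})
    return records


def to_jsonl(records: List[Dict]) -> str:
    """Serialize records as JSON Lines, one archived frame per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


# Two assumed transcript updates within the first 0.2 seconds of audio.
segments = [(0.0, "good morning"), (0.08, "good morning from the harbor")]
archive = prompts_per_frame(segments, duration=0.2)
print(to_jsonl(archive))
```

In this sketch each archived line would also be the natural place to store a reference to the generated image, which is what would make the sequence of text and images traceable afterwards.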

Toru YOKOYAMA (v0id), Kensuke TOBITANI (IAMAS), Kyo AKABANE (IAMAS), Shinya KITAJIMA (IAMAS)

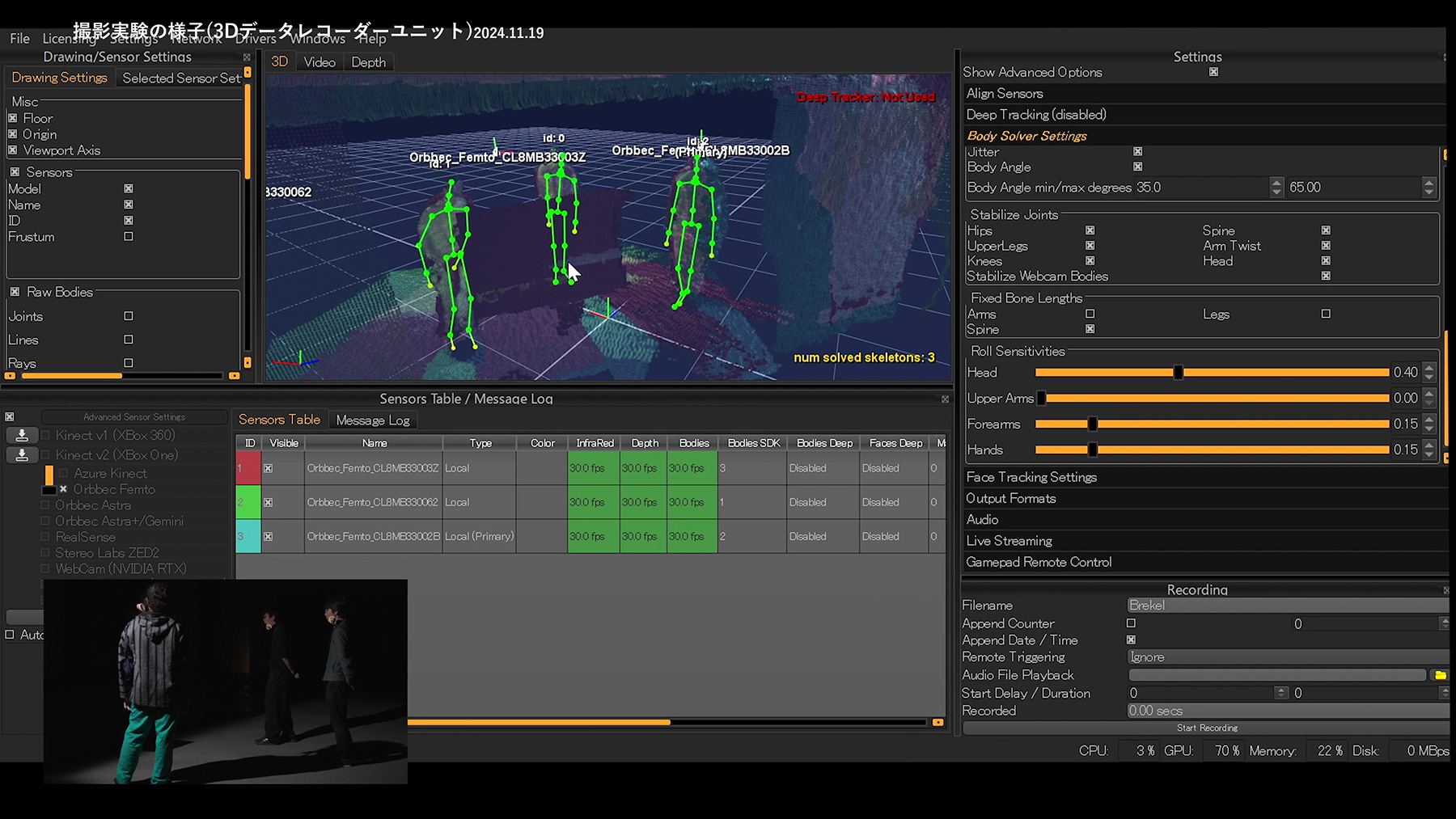

This project explores ways to reconstruct time-based artworks and the viewer’s audiovisual experience within virtual space.

The VR Archive Viewer currently in development enables synchronized playback of heterogeneous data—such as video, binaural sound, and 3D information—allowing users to re-experience the viewer’s sensory perception within VR.

By expanding the possibilities of recording and preserving artworks, the project proposes a new approach to documenting and archiving diverse forms of artistic expression.

Project Lead: Yasunori IKEDA (Aichi University of the Arts)

Co-Investigators: Kyo AKABANE (IAMAS), Kensuke TOBITANI (IAMAS)

Development Cooperation: Koki YAMADA, Masahiro FUSHIDA (Marble Corp.), Akiko KYONO (FLAME Co., Ltd.)

Experimental Cooperation: Takayuki NEGI (Shizuoka University of Art and Culture), Satoshi FUKUSHIMA (Composer), Yu TAKAHASHI (Tangent Design Inc.)

This research was supported by JSPS KAKENHI Grant Number JP23K00238 and by the Nitto Foundation.

MultiChannel MR is an advanced system developed from the VR design platform MultiChannel VR, originally created for spatial video installations. By employing MR technology, it enables intuitive manipulation of digital images overlaid onto physical space.

Initially conceived as an environment for designing exhibition layouts within virtual space, the project—through a series of user tests—has revealed the potential for new expressive domains where physical and virtual spaces coexist, and for experiential video expressions that unfold within them.

This exhibition presents the MultiChannel MR system itself, along with an interactive area where visitors can operate it using an HMD. In addition, an MR-based video work by Rena OKONOGI (Aichi University of the Arts, New Media & Image) is on view.

Project Lead: Kyo AKABANE (IAMAS)

Technical Development: Masahiro FUSHIDA (Marble Corp.)

Collaborators: Yasunori IKEDA (Aichi University of the Arts, New Media & Image), Yushi YASHIMA (Aichi University of the Arts, New Media & Image)

Artwork: Rena OKONOGI (Aichi University of the Arts, New Media & Image)

Using mixed reality (MR) technology, this work imagines the hidden stories embedded within everyday urban scenery. When I visited Shinjuku, what struck me was a landscape that could hardly be called beautiful, yet possessed a distinctive charm born of its clutter and chaos. Graffiti on walls, stickers on pillars, cigarette butts discarded on the street, and a broken outdoor electrical outlet—each is a trace of someone’s action, a remnant of intentions left behind without explanation, inviting us to imagine a fragment of the past. Behind these traces lie brief moments of choice or impulse. The urban landscape seems to hold within it such small narratives.

By layering new stories onto traces that usually go unnoticed, the work creates an additional stratum within familiar surroundings through MR. It seeks to reveal fleeting moments in which these overlooked remnants quietly surface within the city.

Rena OKONOGI (Aichi University of the Arts, New Media & Image)

Application Development: Masahiro FUSHIDA (Marble Corp.)

AR Audio Guide is a smartphone application that focuses on the relationship between “place” and “sound” through the use of augmented reality (AR) technology. By analyzing the smartphone’s camera feed and built-in sensor data, the system estimates the user’s position in real time and automatically plays pre-set audio corresponding to specific locations. This makes it possible to create an interactive acoustic environment—a “soundscape overlay”—within an exhibition space, or to naturally provide background information and commentary on artworks.
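The location-triggered playback described above can be pictured with a small sketch. It assumes the position estimate is already available as floor-plan coordinates in metres and that each audio clip is tied to a circular zone; the `AudioZone` type, `select_clip` function, zone layout, and file names are all hypothetical and do not reflect how AR Audio Guide itself is implemented.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Optional


@dataclass(frozen=True)
class AudioZone:
    """A hypothetical circular trigger zone tied to one audio clip."""
    name: str
    x: float       # zone centre, metres (assumed floor-plan coordinates)
    y: float
    radius: float  # trigger radius, metres
    clip: str      # placeholder clip identifier


def select_clip(zones: List[AudioZone], x: float, y: float) -> Optional[str]:
    """Return the clip of the nearest zone that contains (x, y), else None."""
    best: Optional[AudioZone] = None
    best_dist = float("inf")
    for zone in zones:
        dist = hypot(zone.x - x, zone.y - y)
        if dist <= zone.radius and dist < best_dist:
            best, best_dist = zone, dist
    return best.clip if best else None


# Assumed layout: two works placed four metres apart along one wall.
zones = [
    AudioZone("work_a", 1.0, 1.0, 1.5, "work_a.m4a"),
    AudioZone("work_b", 5.0, 1.0, 1.5, "work_b.m4a"),
]

print(select_clip(zones, 1.2, 0.8))  # inside the zone around work_a
print(select_clip(zones, 3.0, 1.0))  # between the two zones
```

Choosing the nearest containing zone, rather than the first match, keeps the result unambiguous where zones overlap. A real application would also need to smooth the position estimate so that playback does not flicker at zone boundaries.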

In this exhibition, a work guide utilizing the soundscape overlay function of AR Audio Guide is implemented within the exhibition guide app glow in/complete. When visitors stand in front of an artwork, the system automatically estimates their position and plays the corresponding audio guide. The system also supports “map interaction”, which allows users to scan multiple marker images printed on paper to trigger related audio content—enabling navigation through sound in both spatial and printed media.

The exhibition features, as an example of the soundscape overlay, the application of this system in the ICC Kids Program 2025 “Mixed Realities: Finding Your ‘Compass’ in the Information Jungle”, along with a related workshop from the same exhibition. As an example of map interaction, it presents Tomoko UEYAMA’s Sound Archive in Motion—Chikusa. In addition, a new work by Miki HIRASE explores AR Audio Guide as a creative medium in its own right, expanding its expressive possibilities.

Technical Development: Yo SASAKI, Masahiro FUSHIDA (Marble Corp.)

Design Direction: Akiko KYONO (FLAME Co., Ltd.)

Sound Design & Mixing: Tomoko UEYAMA (asyl Co., Ltd.)

Overall Direction: Kyo AKABANE (IAMAS)

This exhibition guide app was developed specifically for the current show. It features an “Active Guide Mode” that plays audio commentary for each work according to the visitor’s movement through the space. When this mode is activated within Shinjuku Ganka Gallery—Space Underground, audio corresponding to each exhibit is automatically played in sync with the visitor’s position. The app provides an intuitive sound-based navigation experience that integrates naturally with the physical exhibition environment.

Download the iPhone app glow in/complete here (free)

https://apps.apple.com/jp/app/ar-audio-guide/id6642662325

Planning & Direction: Akiko KYONO (FLAME Co., Ltd.), Runa MITSUNO (IAMAS)

UI Design: Akiko KYONO (FLAME Co., Ltd.)

App Implementation: Yo SASAKI

Technical Development: Yo SASAKI, Masahiro FUSHIDA (Marble Corp.)

Supervision: Kyo AKABANE (IAMAS)

As AR technology, which allows virtual elements to be experienced overlaid onto real space, becomes more familiar in everyday life, is our ability to imagine the “virtual” within the mind beginning to change?

Starting from this question, this work uses sound and the AR Audio Guide as its medium. Following the spoken instructions, viewers imaginatively build invisible lines, planes, and shapes within the exhibition space, experiencing the process itself as a kind of practice.

Miki HIRASE

Technical Support: Yo SASAKI, Masahiro FUSHIDA (Marble Corp.)

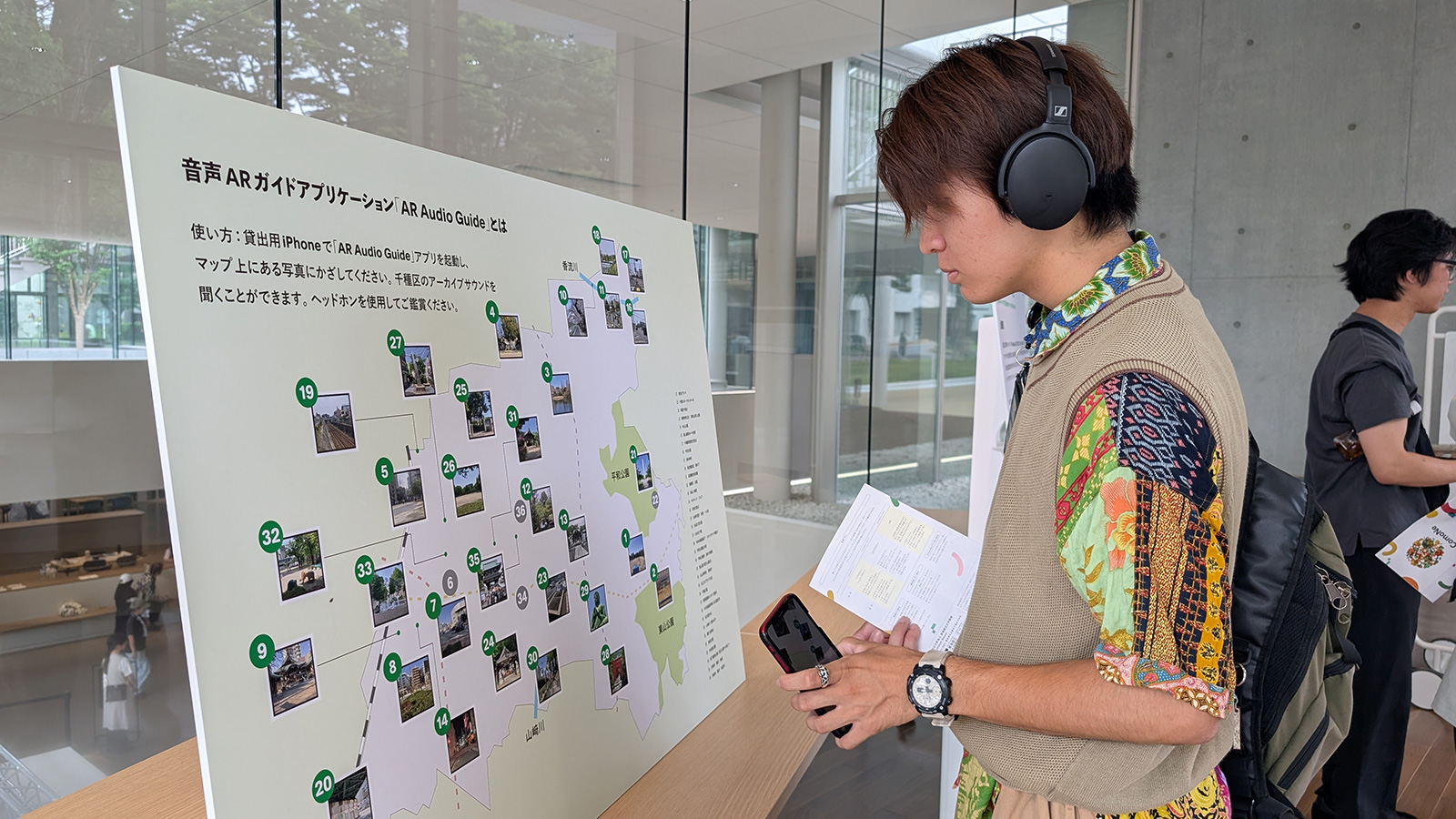

This project collected the regional soundscapes of Chikusa Ward, Nagoya, capturing a diversity of local sounds to rediscover the area’s culture, daily life, and historical background through sound. It explores and creates new connections between the local community and its listeners.

Focusing on the area surrounding Common Nexus (ComoNe), newly opened within Nagoya University, the work forms a sound archive that captures the everyday sonic environment of Chikusa and connects it to the listener’s present moment. Using the audio AR smartphone application AR Audio Guide, UEYAMA created a sound installation based on this archive.

In this exhibition, the work is presented as a “map interaction”, where visitors can move their smartphones over a map marked with recording points to listen to archived sounds corresponding to each location.

Planning Director, Recording, Sound Design: Tomoko UEYAMA (asyl Co., Ltd.)

Planning & Production: Kyo AKABANE (IAMAS)

Design: Akiko KYONO (FLAME Co., Ltd.)

Technical Development: Masahiro FUSHIDA (Marble Corp.), Yo SASAKI

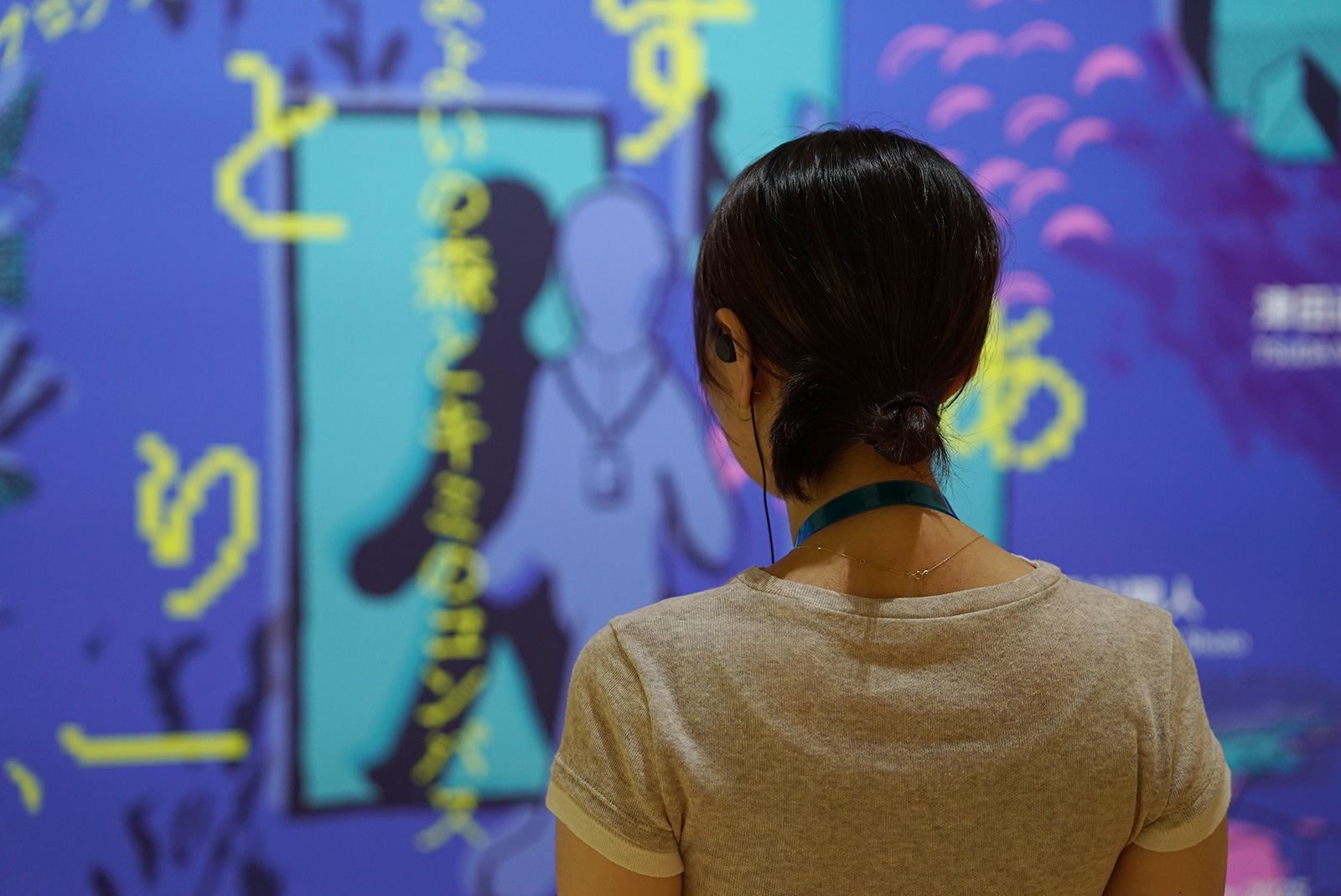

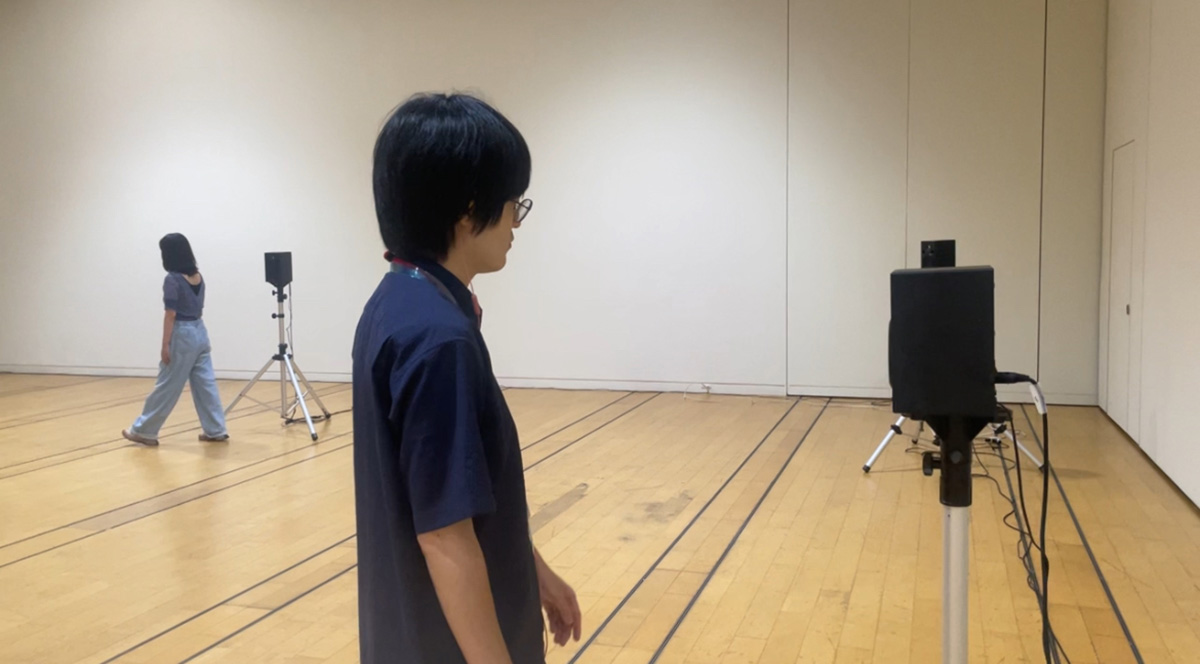

The work presents a “sonic Mixed Reality” where the physical sound field and individual auditory layers coexist. It invites the audience to re-listen to the world through resonance, reaction, and the interplay of sound. Each speaker placed throughout the exhibition space emits a distinct tone; as a visitor approaches, the sound is “acquired,” and the tone reproduced through earphones overlaps with the surrounding acoustic environment. As multiple visitors gather, their individual sound layers intersect and interfere, giving rise to spontaneous rhythms and harmonies.

Reasonance redefines listening not as passive reception but as a creative act—an act of shaping space through sound.

Production & Direction: Masahiro FUSHIDA (Marble Corp.), Yo SASAKI

Photo courtesy of NTT Intercommunication Center [ICC]

The Sound Mapping Workshop—Invisible Things, Audible Sounds was held as part of the ICC Kids Program 2025 “Mixed Realities: Finding Your ‘Compass’ in the Information Jungle.”

Using the functions of the audio AR smartphone application AR Audio Guide, participants created “sound maps” by imagining unseen landscapes through the sounds they heard.

This exhibition presents a documentary video from the workshop, capturing the participants’ experience of perceiving space through sound and how their auditory perception expanded into spatial imagination.

Planning & Production: a-semi (Shinya KITAJIMA, Runa MITSUNO, Rahmat Muhammad Fikri ZIKRI / IAMAS)

Technical Development: Masahiro FUSHIDA (Marble Corp.), Yo SASAKI, Shinya KITAJIMA (IAMAS)

Supervision: Kyo AKABANE (IAMAS)

Cooperation: Institute of Advanced Media Arts and Sciences [IAMAS]